Demons are those supernatural entities that need little introduction.

Everyone knows what a demon is. Everyone did, in every culture throughout history, since the Paleolithic era. Some of us even have our own personal demons today. I call mine Stephen - we are good friends.

Being this popular, it would be only a matter of time before these creatures made their way into the sciences. According to folklore, insanity is one of those things that tends to attract demons and they couldn’t have picked a better place to manifest than within the madness of 19th century thermodynamics.

However, before we get to that, a small recap of what’s been happening so far.

Previously on…

In the last episode we saw Rudolf Clausius being really bothered by the fact that we didn’t really understand the true nature of thermodynamical phenomena. Sure, heat got transferred, engines performed work and we could follow that mathematically. However, no one could explain why these things were occurring in the first place. What was really happening to matter that allowed things like that to happen?

To wrestle with this, Clausius ventured that perhaps all of it was due to particles of matter moving around and changing their arrangements. In order to quantify the degree of those microscopic changes he introduced the concept of entropy.

After staring for an uncomfortably long time at the Carnot engine, he defined the change in entropy as the heat transferred (or heat-equivalent work) divided by the temperature at which that transfer occurs, so Q/T.

He also showed that, added up globally, this quantity must always be at least 0. This boring result quickly led us to the fun realization of the inevitable demise of our Universe into a cold, dark void of nothingness.
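To see the "at least 0" part with actual numbers, here is a minimal sketch. It assumes a fixed amount of heat leaving a hot body and entering a cold one, with both bodies large enough that their temperatures do not budge. The function name and the numbers are made up for illustration.

```python
def total_entropy_change(heat_joules, t_hot_kelvin, t_cold_kelvin):
    """Add up Q/T for heat flowing from a hot body to a cold one.

    Assumes both temperatures stay constant during the transfer.
    """
    lost_by_hot = -heat_joules / t_hot_kelvin      # hot body gives up Q at T_hot
    gained_by_cold = heat_joules / t_cold_kelvin   # cold body receives Q at T_cold
    return lost_by_hot + gained_by_cold


if __name__ == "__main__":
    # Illustrative numbers: 100 J flowing from 400 K to 300 K.
    print(total_entropy_change(100.0, 400.0, 300.0))
```

The cold body gains more Q/T than the hot body loses, so the sum is positive. Swap the two temperatures (heat flowing from cold to hot) and the sum turns negative, which is exactly the case that never happens on its own.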

But let’s not dwell on that.

This chain of thought allowed Clausius to say why thermodynamical processes tended to occur in certain ways and not others. At the same time he managed to confuse the hell out of everyone, to this day, about what entropy actually is.

And now you’re all caught up.

A sound speed

Clausius was definitely on a roll and the tornado of reasoning that swept his mind did not stop there. He realized that if transferring heat and doing work was supposed to be the result of particle motion, then we should be able to link some thermodynamical properties of matter with the kinematics of its components.

If only someone had derived, oh I don’t know, the relationship between gas pressure and the velocity of its molecules from Newtonian laws of motion…

Oh yeah… Bernoulli did that over 100 years earlier, but everyone was like: “Nah, that’s stupid, because matter is not made of particles.” I guess people weren’t ready for that back then.

However, times had changed. The idea that molecules form all matter was slowly becoming the talk of the town and you couldn’t find a bar fight that didn’t start with someone badmouthing someone else’s particles. Sensing the change, Clausius decided to revive Bernoulli’s work.

With the assumptions that the gas is made of many (N) molecules, each with the same mass (m) and the same speed (small v), and that these molecules never collide with each other, the relationship between pressure and molecule velocity became:

P*V = (1/3)*N*m*v^2

where P is the pressure and large V the volume of the gas. This was an awesome result, because it expressed everything in terms of Newtonian mechanics, which everyone accepted and knew so well. For a while, life was grand.

But, as expected, questions started popping up. That speed in the equation - people were curious to know what the value of that was. One of those people was a Dutch meteorologist, Christophorus Henricus Diedericus Buijs Ballot. Besides taking forever to introduce himself, he plugged in some numbers from various experiments and got an interesting value in the end.

The result was that v is similar to the speed of sound.
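Here is a rough sketch of that kind of back-of-the-envelope check. It assumes the kinetic relation P*V = (1/3)*N*m*v^2 and rewrites it with the gas density rho = N*m/V, which gives v = sqrt(3*P/rho). The pressure and density below are typical sea-level textbook values for air, not the numbers Buijs Ballot actually used, and the function name is made up.

```python
import math


def molecular_speed(pressure_pa, density_kg_m3):
    """Speed v from P*V = (1/3)*N*m*v^2, using density = N*m/V."""
    return math.sqrt(3.0 * pressure_pa / density_kg_m3)


if __name__ == "__main__":
    # Assumed values for air at sea level: about 101325 Pa and 1.2 kg/m^3.
    print(molecular_speed(101325.0, 1.2))
```

With these inputs the speed lands at roughly 500 m/s, the same order of magnitude as the speed of sound in air (a few hundred m/s).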

How bizarre, right? After all, air didn’t seem to just rush at people at the speed of sound in normal circumstances. You don’t get swept away every time you open a door or window.

Or take diffusion as an example. The spontaneous mixing of different gasses progressed much more slowly, as could be witnessed by literally anyone. Next time you spray some perfume on yourself, notice that you hear the sound of the spray before you smell the perfume itself.

The result didn’t seem to agree with reality and Christophorus Henricus Diedericus Buijs Ballot was especially anal about that diffusion issue. However, Clausius was no dummy and he was not going to abandon particle motion just like that.

Captain Thermodynamics had a prompt response, but it seemed that he could not avoid collisions between air molecules after all. He imagined that a molecule can only travel so far before it bumps into its neighbor. However, trying to apply Newtonian mechanics to every single particle in order to track their collisions is as close to insanity as one can get.

The solution? The “mean free path”, or the average distance a particle moves before a collision occurs.

Wait… mean and average - these are not words that describe dynamics. These are words those awful statisticians use!

Ughh… you know what that means, right? All hopes of just applying Newton’s laws and calling it a day are gone. Reality had other plans and now we have to do … probabilities!

Ok, fine! So how does the mean free path help us and how do we make Christophorus Henricus Diedericus Buijs Ballot go away?

To answer that, let’s pretend that we can follow the path of a single particle (starting at the red point) colliding among countless other ones (blue circle).

Pretty chaotic and it obviously struggles to make progress in leaving the circle. Each line segment is, more or less, the length of the mean free path. The direction of each subsequent segment is random - particles don’t have preferences. Also we are not touching the original speeds. Each segment is traversed at about the speed of sound, just like Clausius told us originally.

We know that the particle (of your favorite perfume) should get somewhere eventually, like into your nose. That means it has to have some effective speed. What could that be?

Well, this particle made the pattern in the picture above. Another one will make a different one and so on. On average, after a set number of collisions, there has to be some typical distance from the starting point at which these particles end up (let’s call it D).

Glossing over some statistics, it turns out that D is:

D = L*sqrt(N)

where N is the number of collisions and L is the mean free path. Now, let’s extract speed from that.
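If glossing over the statistics feels like cheating, the standard random-walk result D = L*sqrt(N) can be checked by brute force. The sketch below is a simplification: it works in 2D, gives every segment exactly the length L, picks each direction uniformly at random, and takes D to be the root-mean-square distance from the start. The function name, walker count and seed are arbitrary choices.

```python
import math
import random


def rms_displacement(num_steps, step_length, num_walkers=2000, seed=0):
    """Root-mean-square distance from the start after num_steps random steps."""
    rng = random.Random(seed)
    total_squared = 0.0
    for _ in range(num_walkers):
        x = y = 0.0
        for _ in range(num_steps):
            angle = rng.uniform(0.0, 2.0 * math.pi)  # no preferred direction
            x += step_length * math.cos(angle)
            y += step_length * math.sin(angle)
        total_squared += x * x + y * y
    return math.sqrt(total_squared / num_walkers)


if __name__ == "__main__":
    steps, length = 100, 1.0
    print("simulated:", rms_displacement(steps, length))
    print("L*sqrt(N):", length * math.sqrt(steps))
```

The two printed numbers should agree to within a few percent. The leftover difference is just sampling noise from using a finite number of walkers.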

If the particle travels at the speed of sound (vs) and covers the distance L before a collision, then a single collision occurs every L/vs seconds. That means N = vs/L collisions every second. Take vs = 330 m/s and L = 1e-7 m, typical for gasses, and put that into D. What you get is that D is around half a centimeter of progress in 1 second.
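The arithmetic is short enough to write out. This sketch assumes the random-walk formula D = L*sqrt(N) with N = vs*t/L collisions in a time t. The function name is hypothetical.

```python
import math


def diffusion_progress(speed_m_s, mean_free_path_m, time_s=1.0):
    """Typical distance from the start after time_s, using D = L*sqrt(N)."""
    collisions = speed_m_s * time_s / mean_free_path_m  # N = vs*t/L
    return mean_free_path_m * math.sqrt(collisions)


if __name__ == "__main__":
    # Values from the text: vs = 330 m/s, L = 1e-7 m, one second of wandering.
    print(diffusion_progress(330.0, 1e-7), "metres")
```

Note that D grows with the square root of time, so waiting 100 times longer only gets the particle 10 times further.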

Now, with the collisions represented by the mean free path, diffusion makes sense again. Yes, the molecules race at the speed of sound, but the constant collisions make it difficult for them to go anywhere. The price we paid is that we had to juggle kinematics and statistics at the same time.

Wanna know what’s cool about diffusion? It also applies to light.

What we’ve seen above is what happens to particles of light (photons) when they try to leave the Sun. They try to get out of the fusion furnace of the star, but constantly bump into atoms and can’t seem to get out.

In the case of light, L is about 1 centimeter and the speed of the particle is the speed of, well, light. If we want the photon to escape the Sun, we need to set D to be the size of the star itself, so around 700,000 km. Plug those numbers in and what you get is that a single photon created in the center of the Sun needs roughly 5,000 years to get to the surface.
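The same formula can be turned around to get the escape time. This is an order-of-magnitude sketch only: it assumes D = L*sqrt(N), a single mean free path of 1 cm everywhere inside the Sun, and the rounded radius from the text. The function and constant names are made up.

```python
SPEED_OF_LIGHT = 299_792_458.0            # m/s
SECONDS_PER_YEAR = 365.25 * 24 * 3600.0


def escape_time_years(radius_m, mean_free_path_m, speed_m_s=SPEED_OF_LIGHT):
    """Time for a random walker to wander a distance radius_m from the start."""
    collisions = (radius_m / mean_free_path_m) ** 2   # from D = L*sqrt(N)
    seconds = collisions * mean_free_path_m / speed_m_s
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Values from the text: D = 700,000 km, L = 1 cm.
    print(escape_time_years(7.0e8, 0.01), "years")
```

With these inputs the answer comes out at a few thousand years. The result scales as 1/L, so a shorter assumed mean free path stretches the trip out accordingly.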

Right, let’s get back to Earth and tell Christophorus Henricus Diedericus Buijs Ballot that he can now piss off and go start an International Meteorological Organization or something.

Maxwell SMASH!

So this new approach to thermodynamics is making serious progress, but there’s more work to be done.

Everybody suspected that it was highly unlikely that all particles of a gas moved with the same velocity. In reality, they probably form a distribution of various velocities. That means more statistics - yeeeey! Let’s avoid some considerable embarrassment and hand it over to the pros.

It turned out that, at the time, none were more pro than James Clerk Maxwell.

No time for a biography, here. This is not the last time I will write about Maxwell, but just know that he published his first scientific paper at the age of 14. With that depressing thought, let’s move on.

Clausius’ acknowledgement of molecular collisions told Maxwell that such collisions must somehow alter the velocities of the particles.

By pretending the colliding particles are hard spheres, just like smashing billiard balls, he was able to derive the (now famous) formula that tells us the probability of finding a particle per unit speed (v). It looks like this:

The a is a constant and not important right now. The shape is what matters most.

We will find a couple of slow particles on the left, very few super fast all the way to the right and most of them down the middle. At room temperature, the majority are speeding at about 440 m/s, so even faster than the speed of sound in the same conditions.
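To poke at the shape yourself, here is a sketch of the distribution in one common textbook form, f(v) = sqrt(2/pi) * v^2 * exp(-v^2 / (2*a^2)) / a^3 with a^2 = k*T/m. Treat that parametrization as an assumption, since the constant a in the formula above may be defined differently. The gas is assumed to be nitrogen at 300 K, and the function names are made up.

```python
import math

BOLTZMANN = 1.380649e-23       # J/K
ATOMIC_MASS = 1.66053907e-27   # kg


def maxwell_pdf(v, mass_kg, temp_k):
    """Probability per unit speed, in the assumed textbook form."""
    a_squared = BOLTZMANN * temp_k / mass_kg          # a^2 = k*T/m
    norm = math.sqrt(2.0 / math.pi) / a_squared ** 1.5
    return norm * v * v * math.exp(-v * v / (2.0 * a_squared))


def most_probable_speed(mass_kg, temp_k):
    """Speed at which the curve peaks: sqrt(2*k*T/m)."""
    return math.sqrt(2.0 * BOLTZMANN * temp_k / mass_kg)


if __name__ == "__main__":
    nitrogen = 28.0134 * ATOMIC_MASS   # assumed gas: N2 molecules
    print(most_probable_speed(nitrogen, 300.0), "m/s")
```

For nitrogen at 300 K the peak sits a bit above 400 m/s, in the same ballpark as the figure quoted above. The exact number depends on which gas, which temperature and which kind of "typical" speed you pick.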

But are we supposed to take Maxwell’s result at face value? No.

Let’s see if he’s right about that. Let’s put some gas particles in a box and see what happens. We will give them all the same speed to start with, but launch them in random directions. We’ll visualize the particles colliding (on the left) and monitor the distribution of their speeds (on the right) in real time.

As time goes by, the initial speeds (the tall spike) disappear and form something resembling Maxwell’s curve, as above.
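A stripped-down version of this experiment fits in a few lines. To be clear, this is a simplified stand-in and not the simulation shown above: it ignores positions entirely, works in 2D, and just keeps picking random pairs of equal-mass particles and scattering them in a random direction while conserving momentum and energy. All names and parameter values are arbitrary.

```python
import math
import random


def relax_speeds(num_particles=2000, num_collisions=40000, speed=1.0, seed=1):
    """Start everyone at the same speed, collide random pairs, return final speeds."""
    rng = random.Random(seed)
    velocities = []
    for _ in range(num_particles):
        angle = rng.uniform(0.0, 2.0 * math.pi)       # random launch direction
        velocities.append((speed * math.cos(angle), speed * math.sin(angle)))
    for _ in range(num_collisions):
        i, j = rng.sample(range(num_particles), 2)
        (ax, ay), (bx, by) = velocities[i], velocities[j]
        cx, cy = (ax + bx) / 2.0, (ay + by) / 2.0     # centre-of-mass velocity
        half_rel = math.hypot(ax - bx, ay - by) / 2.0
        angle = rng.uniform(0.0, 2.0 * math.pi)       # random scattering angle
        dx, dy = half_rel * math.cos(angle), half_rel * math.sin(angle)
        velocities[i] = (cx + dx, cy + dy)            # same relative speed,
        velocities[j] = (cx - dx, cy - dy)            # new direction
    return [math.hypot(vx, vy) for vx, vy in velocities]


def spread(values):
    """Standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


if __name__ == "__main__":
    print("spread before:", spread(relax_speeds(num_collisions=0)))
    print("spread after: ", spread(relax_speeds()))
```

Before any collisions the spread of speeds is zero (the tall spike). Afterwards the speeds fan out into a broad hump, the 2D cousin of Maxwell's curve, while the total kinetic energy stays exactly where it started.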

So yeah, Maxwell seems to have nailed it and this fusion of kinematics and statistics, in the context of thermodynamics, seemed like the way to go.

However, it also came with its own set of new mysteries.

For example, all these considerations were only about translational motion of the particles, so back and forward, left to right, top to bottom. One could ask if that’s all there is. What if the particles were also rotating around some axis, because why not?

Also, what if collisions weren’t the only possible interaction? What if there were other options, as well, like mutual attraction or repulsion of particles? All seemed like reasonable possibilities.

There was a growing suspicion that there is more to it. It became especially apparent when dealing with specific heats. Specific heat tells us how much energy we have to put into a thing in order to warm it up by a certain temperature.

Bernoulli and Clausius showed that you could associate the speed of gas particles with things like pressure and density and therefore, temperature. This meant that we could use speed distributions, like Maxwell’s, to calculate specific heats. So people did aaand just couldn’t get it to fit experiments, no matter what.

At some point Maxwell gave up and said:

Here we are brought face to face with the greatest difficulty which the molecular theory has yet encountered.

Time would show that, to get it right, we would need a completely new approach to physics and the microscopic world, a quantum approach. However, that’s a story for another time.

Right… time… time would prove to be the most important problem of all.

The demon manifests

While all of this kinematic story of thermodynamics was unfolding, an elephant walked into the room and its presence was ever more oppressive.

You see, everything we knew about kinematics told us that we could reverse the flow of time and arrive at the starting point.

For example, drop something and it hits the floor, releasing kinetic energy. However, you can always take that same energy to launch it up, so that it flies back into your hand.

Yet, thermodynamical systems don’t seem to behave this way. Hot things heat cold things, but not the other way around, which is the essence of the second law of thermodynamics. As we’ve witnessed here, entropy must increase.

Finally, J.J. Loschmidt (aka JJ Cool L) came out and said that this cannot be. The second law of thermodynamics, as stated by Sadi Carnot, Rudolf Clausius and others, is absolute and the kinematic approach is likely a mistake.

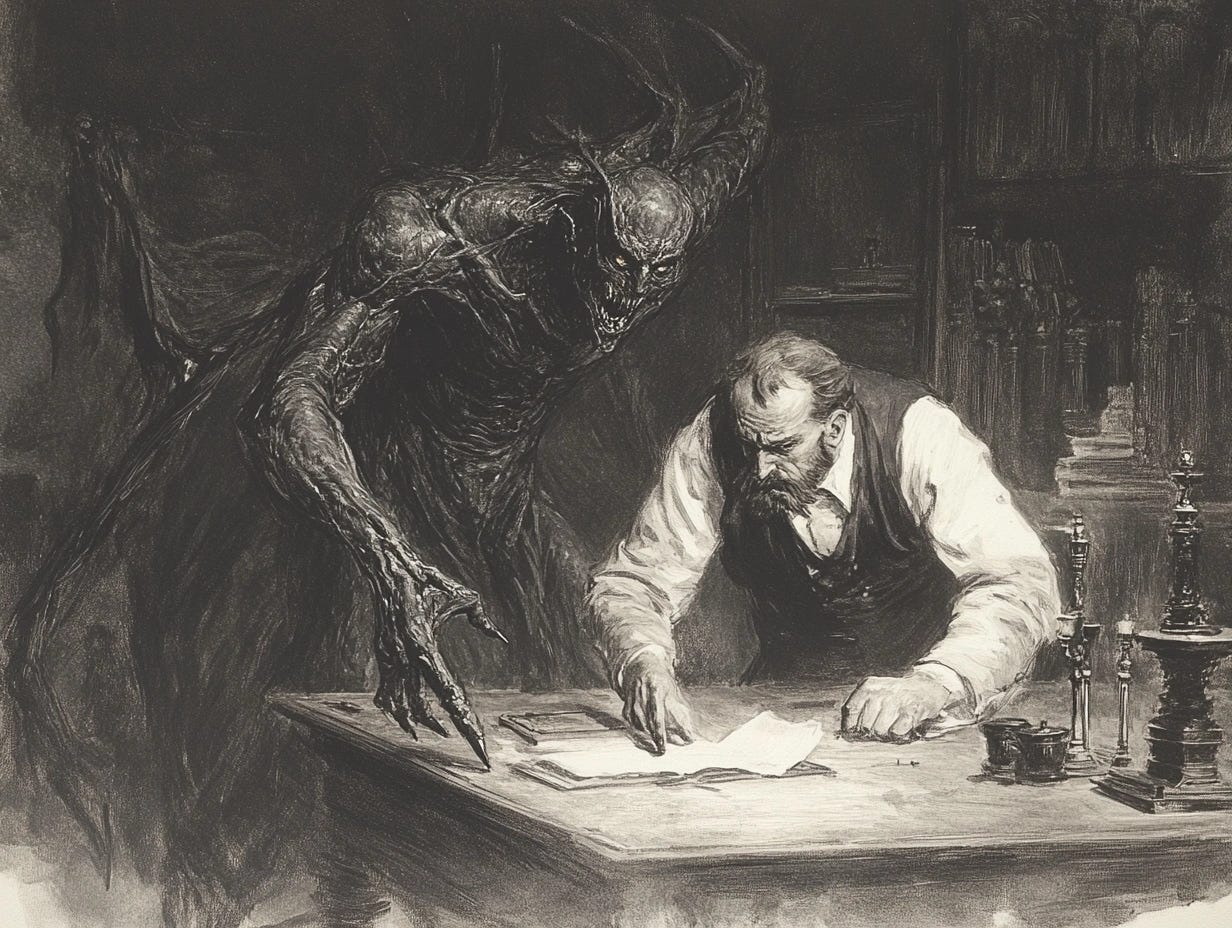

Maxwell was well aware of this problem and in his desperation, he turned to the occult. In a dark ritual he summoned a demon to help him resolve this catastrophe (totally true, trust me bro). However, as these stories go, the demon only made things worse.

We are talking about a powerful entity here, one which could steer the flow of matter on a molecular level. Here’s how its trickery unfolded.

You could enclose some gas in a box. The gas would have some temperature, the same everywhere in the box. That temperature would translate into the average speed of the particles making up the gas. Each particle’s speed would also fit nicely into the recently developed Maxwell’s distribution.

Now, the demon would come in and place a demonic wall in the middle of the box. That wall controls the fate of each particle approaching it. In its sinister design the demon would allow the passage of only fast particles to one side and only slow particles to the other side, like so:

As you can see, the demon has accomplished the impossible. It made one part of the gas hotter than the other, at seemingly no cost. This was a direct violation of the second law of thermodynamics.
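The demon's sorting trick is easy to mimic in code. This sketch assumes each velocity component is drawn from a bell curve (in arbitrary units), uses the median as the demon's cut-off between "fast" and "slow", and uses the average squared speed on each side as a stand-in for temperature. The function name and parameters are made up.

```python
import random


def demon_sort(num_particles=10000, seed=2):
    """Split particles at the median and return (cold, hot) mean squared speeds."""
    rng = random.Random(seed)
    # Squared speed of each particle: three Gaussian velocity components.
    squared_speeds = [
        sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(3))
        for _ in range(num_particles)
    ]
    threshold = sorted(squared_speeds)[num_particles // 2]   # demon's cut-off
    fast = [s for s in squared_speeds if s >= threshold]     # sent to one side
    slow = [s for s in squared_speeds if s < threshold]      # sent to the other
    return sum(slow) / len(slow), sum(fast) / len(fast)


if __name__ == "__main__":
    cold, hot = demon_sort()
    print("slow side:", cold, "fast side:", hot)
```

Both halves came from the same gas at one temperature, yet after sorting one side has a clearly higher average squared speed than the other. Notice that the code needed to know every particle's speed to pull this off.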

At this point, even Maxwell started doubting his own work and had to take a break. Perhaps this kinematic view on matter and heat was a dead end, after all…

Entropy always wins

When it seemed that all hope was lost, statistics would once again prove to be the answer. This time it was delivered by Ludwig Boltzmann, who picked up where Maxwell left off.

B-mann accepted that we cannot realistically expect to track every particle’s speed or to observe their rearrangements in space. What we can do, however, is look at the outcomes of those rearrangements at a macroscopic scale.

For example, the air particles in a room smash around all over the place and that manifests as room temperature. This is a macro thing that we can observe. Now, if all of a sudden the particles decided to escape into one of the corners of the room, we would begin to suffocate - that’s also a thing to observe.

This is cool in itself, but no cigar. The thing that makes it spectacular is when we realize that multiple different microscopic arrangements may appear as the same macroscopic picture. After all, the temperature of the room is constant, but the air molecules change their positions and speeds all the time.

Now, all we need to add is that no micro arrangement is special or more likely than another, and we have our answer.

Do you see now? To visualize this, let’s look at it through Boltzmann’s eyes. He had this funny thing, where he really didn’t like continuous things, like integrals. For him, these were not well defined as underneath we are adding discrete elements to each other anyway. Therefore, why not just start with a discrete view in the first place?

Let’s take a room, fill it with gas (I take pride in avoiding Holocaust jokes, so far) and pretend that particles of that gas can only occupy positions within a grid. Also, in order not to overwhelm ourselves, we will not be tracking the speed of the molecules. Here’s how it looks:

Air spread out evenly in a room, at some temperature, can be represented by a bajillion different combinations of particles. It doesn’t matter, as on a macroscopic scale, these possibilities are indistinguishable.

What is distinguishable is all molecules of air suddenly escaping into a corner. However, this can be achieved through only maybe a few combinations.

The molecules themselves don’t care. They can choose either to be spread out or in the corner. But if the number of ways to be spread out is so outrageously larger than the number of ways to be in the corner, then the probability of choosing the corner is virtually 0.
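The counting can be done on a toy grid. This sketch assumes identical particles, at most one particle per grid cell, and every arrangement being equally likely. The grid size, the corner size and the function names are made-up illustrations.

```python
import math


def count_arrangements(num_cells, num_particles):
    """Ways to place identical particles on a grid, at most one per cell."""
    return math.comb(num_cells, num_particles)


def corner_probability(total_cells, corner_cells, num_particles):
    """Chance that a randomly picked arrangement has everyone in the corner."""
    in_corner = count_arrangements(corner_cells, num_particles)
    anywhere = count_arrangements(total_cells, num_particles)
    return in_corner / anywhere


if __name__ == "__main__":
    # Toy room: 100 cells, 10 particles, and a "corner" made of 10 cells.
    print(count_arrangements(100, 10), "ways in total")
    print(corner_probability(100, 10, 10), "chance of the corner")
```

Even for this tiny room with 10 particles the corner is a less than one-in-a-trillion event. A real room holds vastly more molecules, which makes the odds incomparably worse.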

So yes, in principle you could reverse the time on every individual molecule. However, from a statistical perspective, this reversal will never happen.

And yes, it’s technically safer to stand in the corner.

But what does this have to do with entropy, you ask? Well, Boltzmann defined his own version of that as:

S = k*logΩ

where k is a constant (now called the Boltzmann constant) and Ω is the number of possible arrangements of molecules. The logarithm is there to make some probabilistic calculations easier, which is standard procedure in statistics. In order not to get booked by the history police, I need to say that Boltzmann didn’t write that form of the equation himself. That came later, thanks to Max Planck. Am I free to go now, officer?

So, when the molecules were spread out throughout the room, there were many possibilities - Ω was large and so was S. When the molecules retreated into the corner, there was only one possibility, so a small S.
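Plugging toy numbers into S = k*logΩ makes the contrast concrete. The sketch assumes the natural logarithm and a made-up grid of 100 cells holding 10 identical particles, with at most one particle per cell. The function name is hypothetical.

```python
import math

BOLTZMANN = 1.380649e-23   # J/K


def boltzmann_entropy(num_arrangements):
    """S = k * log(omega), using the natural logarithm."""
    return BOLTZMANN * math.log(num_arrangements)


if __name__ == "__main__":
    spread_out = math.comb(100, 10)   # particles anywhere on the toy grid
    in_corner = 1                     # only one way to fill a 10-cell corner
    print("spread out:", boltzmann_entropy(spread_out), "J/K")
    print("in corner: ", boltzmann_entropy(in_corner), "J/K")
```

A single possible arrangement gives log(1) = 0, so the corner state has the lowest entropy this toy system can have.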

Since the corner case never happens, entropy never decreases, just like Clausius said.

And that, kids, is how you ensure that kinematics can be applied to gasses without violating the second law of thermodynamics.

Ok, but what does Boltzmann’s S = k*logΩ have to do with Clausius’ S = Q/T? Although it may not look like it, these are equivalent, but it takes a bit of work to show it.
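One standard textbook case where the two can be compared directly is an ideal gas of N molecules expanding slowly at constant temperature T from volume V_1 to V_2. The sketch below covers only that one case and is not the general proof. It assumes the number of positional arrangements scales as Ω ∝ V^N and that, at constant temperature, all the heat Q taken in goes into the work of expansion.

```latex
\begin{align*}
\Delta S_{\text{Boltzmann}}
  &= k \ln \Omega_2 - k \ln \Omega_1
   = k \ln\!\left(\frac{V_2}{V_1}\right)^{N}
   = N k \ln\frac{V_2}{V_1}, \\
Q &= \int_{V_1}^{V_2} P \, dV
   = \int_{V_1}^{V_2} \frac{N k T}{V} \, dV
   = N k T \ln\frac{V_2}{V_1}, \\
\Delta S_{\text{Clausius}}
  &= \frac{Q}{T}
   = N k \ln\frac{V_2}{V_1}.
\end{align*}
```

Counting arrangements and dividing heat by temperature give the same answer here, which is at least a hint that the two definitions describe the same thing.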

Exorcism

Before we go, we still have some unfinished business - Maxwell’s demon. It’s still running around and we need to banish it to where it came from.

That exorcism will require looking closer at what the demon is doing. The foul creature was dictating the movement of fast and slow particles at a whim. It seemed to decrease entropy and didn’t pay any price for it.

Or did it?

Being the mad scientists that we are, we tried to rebuild the demon in a lab multiple times. What we’ve learned is that even demons have to pay.

“Knowing” whether a particle is fast or slow represents an amount of information. Accumulating that information requires mental effort from the demon. You might say it requires work. As we’ve learned over our time together here at Physics Rediscovered, doing absolutely anything, even having a thought, is a process that will increase entropy.

The overall outcome for the demon is an increase in entropy after all. Like I said, entropy always wins.
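One common way to put a number on that price is Landauer's bound, which the text above does not name, so treat the connection as an assumption. It says that wiping one bit of information from a memory at temperature T dissipates at least k*T*ln(2) of energy. The function name and the bit count in the example are hypothetical.

```python
import math

BOLTZMANN = 1.380649e-23   # J/K


def landauer_limit_joules(temp_k, bits=1):
    """Minimum energy dissipated when erasing `bits` bits at temperature temp_k."""
    return bits * BOLTZMANN * temp_k * math.log(2.0)


if __name__ == "__main__":
    # One fast-or-slow decision is one bit; assume the demon works at 300 K.
    print(landauer_limit_joules(300.0), "J per particle sorted")
    print(landauer_limit_joules(300.0, bits=6.022e23), "J for a mole of decisions")
```

The cost per bit is tiny, but it is not zero. A demon with a finite memory has to keep wiping old measurements to make room for new ones, and that is where it pays.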

So: In the name of a random Universe and entropy, begone demon! The power of statistics compels you! The power of statistics compels you! The power…!

I dunno, it just doesn’t have that ring to it.